Planning for a migration

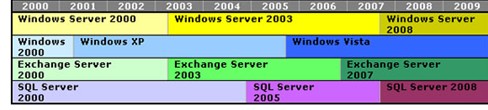

Planning for product life cycles requires an implementation strategy. Migration of computer systems has evolved from a manual process (a complete rebuild followed by copying over the data files) to a more intelligent method that transfers a system's settings first and then its data files.

Many IT professionals can attest that new servers demand a large investment in setup time and fine-tuning. Domain controllers, user and group policies, security policies, operating system patches, and additional services for users all take time to set up. Fine-tuning the server after the rollout can be time-consuming as well. Once the work is complete, a system administrator wants confidence that the equipment and operating system will operate normally.

Thought must also be given to the settings and other customizations that users have made on their workstations. Some users have broad rights over their machines and can therefore customize software installations, redirect default file locations, or install programs that are unknown to the IT department. These unique user settings can cause a one-size-fits-all migration to fail. The aftermath is a disaster: missing software and data files, lost productivity as users re-customize their workstations, and worst of all, overwritten or lost files.

Deployment test labs are a must for migration preparation. A test lab should include, at a minimum, a domain controller, one or two sample production file servers, and enough workstations, sample data, and users to simulate a user environment. Virtualization software can assist with testing automated upgrades and migrations. The software tools that perform the actual migration vary: some come from operating system vendors, while others are third-party applications or enterprise software suites that also provide archiving functions. There are a number of documents and suggestions for migration techniques (some are listed in the references).
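The minimum lab described above can be written down as a simple checklist and verified before any testing starts. The following is a minimal sketch, not a prescribed tool: the role names, the `MINIMUM_LAB` table, and the `missing_lab_roles` function are hypothetical, and the workstation count of three is an assumption, since the text only calls for "enough" workstations.

```python
from collections import Counter

# Hypothetical minimums based on the paragraph above. The workstation
# count is an assumption; the text only says "enough workstations".
MINIMUM_LAB = {"domain_controller": 1, "file_server": 1, "workstation": 3}


def missing_lab_roles(inventory, minimum=MINIMUM_LAB):
    """Return {role: shortfall} for every role below its minimum count.

    `inventory` is a list of (machine_name, role) pairs.
    """
    counts = Counter(role for _name, role in inventory)
    return {
        role: needed - counts[role]
        for role, needed in minimum.items()
        if counts[role] < needed
    }


# Illustrative inventory: one machine of each role.
lab = [
    ("dc01", "domain_controller"),
    ("fs01", "file_server"),
    ("ws01", "workstation"),
]
print(missing_lab_roles(lab))  # {'workstation': 2}
```

An empty result means the lab meets the stated minimums; it says nothing about whether the sample data and users are representative, which still needs human review.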

The success of a migration rests on analysis, planning, and testing before changes are rolled out. For example, one company with over 28,000 employees had a very detailed migration plan for its users. The IT department used a lab, separate from the corporate network infrastructure, to test deployments and had a team working specifically on migration. The team had completed the test-lab phase of its plan, and the migration was successful in that controlled environment.

The next phase was to roll out a test case to some of the smaller departments within the company. The test case migration was scheduled to run automatically when the users logged in. The migration of the user computers to a new operating system started as planned. After the migration, the user computers automatically started downloading and installing software updates, as required by a domain policy. Unfortunately, one of these updates had not been tested. The unexpected result was that user computers in the test case departments were left inoperable.

Some of the users in the test case contacted the IT Help Desk for assistance. IT immediately started troubleshooting the symptoms without realizing that they were caused by an error in the migration test case. Other users in the department who considered themselves technically savvy tried to solve the problem themselves. This made matters worse when one user reformatted the disk, reinstalled the operating system, and overwrote a large portion of the original data files.

Fortunately for this company, its plan was built in phases and had break-points along the way so that the success of the migration could be measured. The failure in this case was twofold: some domain policies had not been implemented on the test lab servers, and the combined effect of a migration followed by the application of software updates had not been fully tested. The losses were serious for some users, yet minimal for the organization as a whole.
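Both lessons from this case can be illustrated in a few lines: compare the policies applied in production with those applied in the lab, and stop a phased rollout at the first break-point that fails. This is only a sketch under stated assumptions. The policy names, phase names, and the functions `policies_missing_from_lab` and `run_phases` are hypothetical and do not describe the tooling the company used.

```python
def policies_missing_from_lab(production, lab):
    """Return the production policies that the test lab does not apply."""
    return sorted(set(production) - set(lab))


def run_phases(phases):
    """Run phases in order and stop at the first failed break-point.

    `phases` is a list of (name, check) pairs, where `check` is a
    zero-argument callable returning True when the phase succeeded.
    Returns (completed_phase_names, failed_phase_name_or_None).
    """
    completed = []
    for name, check in phases:
        if not check():
            return completed, name
        completed.append(name)
    return completed, None


# Hypothetical policy names, for illustration only.
production_policies = ["password-policy", "auto-install-updates", "drive-mappings"]
lab_policies = ["password-policy", "drive-mappings"]

gaps = policies_missing_from_lab(production_policies, lab_policies)
print(gaps)  # ['auto-install-updates']

phases = [
    ("lab parity", lambda: not gaps),       # lab must mirror production policies
    ("pilot departments", lambda: True),    # placeholder check
    ("company-wide", lambda: True),         # placeholder check
]
print(run_phases(phases))  # ([], 'lab parity')
```

In this illustration the rollout halts before the pilot because the lab does not apply the automatic-update policy, which is the kind of gap that went unnoticed in the case above.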

For other migration rollouts, the losses can be much more serious. For example, one company's IT department created a logon script to apply software updates. However, an untested line of the script started a reinstall of the operating system. As users logged in to their computers at the start of the week, most noticed that startup was taking longer than usual. When they were finally able to access their desktops, they found that all of their user files and settings were gone.

The scripting problem was not seen during the test lab phase, IT staff said. Over 300 users were affected and nearly 100 computers required data recovery services.

This illustrates the importance of the planning and testing phases of a migration. Creating a test environment that mirrors the IT infrastructure will go a long way toward anticipating and fixing problems. Yet even with the most carefully thought-out migration plan, the most experienced data professionals know to expect the unexpected. Where can you turn if your migration rollout results in a disaster?

Every IT professional can tell a horror story about an upgrade, rollout, or migration gone awry. So many factors are involved: hardware, software, compatibility, timing, data, procedures, security protocols, and of course the well-meaning but imperfect human.
