Customizing Imaging Algorithms

Consider the following conflicting factors involved in disk imaging:

- A high number of read operations on a failed drive increases the chances of recovering all the data and decreases the number of probable errors in that data.

- Intensive read operations increase the rate of disk degradation and increase the chance of catastrophic drive failure during the imaging process.

- Imaging a drive can take a long time (for example, one to two weeks), depending on the intensity of the read operations. Customers with time-sensitive needs may prefer to rebuild data themselves rather than wait for recovered data.

Clearly these points suggest the idea of an imaging algorithm that maximizes the probable data recovered for a given total read activity, taking into account the rate of disk degradation and the probability of catastrophic drive failure.

However, no universal algorithm exists. A good imaging procedure depends on such things as the nature of the drive problem and the characteristics of the vendor-specific drive firmware. Moreover, a client is often interested in a small number of files on a drive and is willing to sacrifice the others to maximize the possibility of recovering those few files. To meet these concerns, the judgment of the imaging tool operator comes into play.

Drive imaging can consist of multiple read passes. A pass is one attempt to read the entire drive, although problem sectors may be read several times on a pass or not at all, depending on the configuration. The conflicting considerations mentioned above suggest that different algorithms, or at least different parameter values, should be used on each pass.

The first pass could be configured to read only error-free sectors. There is a fair possibility that the important files can be recovered faster in this way in just one pass. Moreover, this pass will not be read-intensive since only good sectors are read and the more intensive multiple reads needed to read problem sectors are avoided. This configuration reduces the chances of degrading the disk further during the pass (including the chances of catastrophic drive failure) while having a good chance at recovering much of the data.

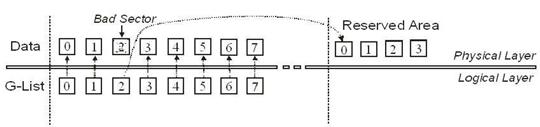

Second and subsequent passes can then incrementally intensify the read processes, with the knowledge that the easily-readable data have already been imaged and are safe. For instance, the second pass may attempt multiple reads of sectors with the UNC or AMNF error (Figure 2). Sectors with the IDNF error are a less promising case, since the header could not be read and hence the sector could not be found. However, even in this case multiple attempts at reading the header might result in a success, leading to the data being read. Successful data recovery of sectors with different errors depends on the drive vendor. For example, drives from some vendors have a good recovery rate with sectors with the IDNF error, while others have virtually no recovery. Prior experience comes into play here, and the software should be configurable to allow different read commands and a varying number of reread attempts after encountering a specific error (UNC, AMNF, IDNF, or ABRT).
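The per-error reread policy described above could be expressed as a small lookup table. The following is a minimal sketch only: the pass numbers, the reread budgets, and the names `REREAD_POLICY`, `reread_attempts`, and `read_with_retries` are illustrative assumptions, and `read_once` stands in for whatever routine actually issues the read command to the drive.

```python
from enum import Enum


class AtaError(Enum):
    """The error types named in the text."""
    UNC = "UNC"
    AMNF = "AMNF"
    IDNF = "IDNF"
    ABRT = "ABRT"


# Hypothetical reread budgets per pass; real values come from operator
# experience with a given drive vendor.
REREAD_POLICY = {
    1: {},                                      # pass 1: never reread
    2: {AtaError.UNC: 3, AtaError.AMNF: 3},     # pass 2: retry UNC and AMNF
    3: {AtaError.UNC: 5, AtaError.AMNF: 5, AtaError.IDNF: 10},
}


def reread_attempts(pass_number, error):
    """Return how many rereads are allowed for this error on this pass."""
    return REREAD_POLICY.get(pass_number, {}).get(error, 0)


def read_with_retries(read_once, pass_number):
    """Read one sector, rereading while the policy allows it.

    read_once is a hypothetical callable returning (data, error),
    where error is None on success.
    Returns (data, error, total_attempts).
    """
    data, error = read_once()
    attempts = 1
    while error is not None and attempts <= reread_attempts(pass_number, error):
        data, error = read_once()
        attempts += 1
    return data, error, attempts
```

Keeping the policy in a table rather than in code lets the operator adjust it per vendor without touching the read loop.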

Drive firmware often has vendor-specific error-handling routines of its own that cannot be accessed directly by the system. While you may want to minimize drive activity to speed up imaging and prevent further degradation, drive firmware increases that activity and slows down the process when faced with read instability. To minimize drive activity, imaging software must implement a sector read timeout, which is a user-specified time before a reset command is sent to the drive to stop processing the current sector.

For example, suppose you notice that good sectors are read in 10 ms. If this is a first pass, and your policy is to skip problem sectors at this point, the read timeout value might be 20 ms. If 20 ms have elapsed and the data has not yet been read, the sector is clearly corrupted in one way or another and the drive firmware has invoked its own error-handling routines. In other words, a sector read timeout can be used to identify problem sectors. If the read timeout is reached, the imaging software notes the sector and sends a reset command. After the drive cancels reading the current sector, the read process continues at the next sector.

By noting the sectors that time out, the software can build up a map of problem sectors. The imaging algorithm can use this information during subsequent read passes.
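As a rough illustration of how a read timeout separates good sectors from problem sectors, the sketch below simulates a first pass over a list of per-sector read latencies. It does not talk to a real drive: the function name `first_pass` and the latency list are assumptions made for the example, and a real tool would send a reset command at the point marked in the comment.

```python
def first_pass(latencies_ms, read_timeout_ms=20.0):
    """Simulate a first pass that skips any sector exceeding the timeout.

    latencies_ms: simulated time each sector would take to read, indexed
    by sector number (a stand-in for real drive behavior).
    Returns (imaged, problem_map) as lists of sector numbers.
    """
    imaged = []
    problem_map = []
    for sector, latency in enumerate(latencies_ms):
        if latency >= read_timeout_ms:
            # Timeout reached: note the sector. A real tool would send a
            # reset command here, then continue at the next sector.
            problem_map.append(sector)
        else:
            imaged.append(sector)
    return imaged, problem_map


# Good sectors read in about 10 ms; sectors 2 and 4 stall in firmware.
imaged, problem_map = first_pass([10, 11, 250, 9, 4000, 10])
print(imaged)        # [0, 1, 3, 5]
print(problem_map)   # [2, 4]
```

Later passes can then iterate over `problem_map` alone instead of rereading the whole drive.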

In all cases the following parameters should be configurable:

- Type of sectors read during this pass

- Type of read command to apply to a sector

- Number of read attempts

- Number of sectors read per block

- Sector read timeout value

- Drive ready timeout value

- Error-handling algorithm for problem sectors

Other parameters may also be configurable but this list identifies the most critical ones.
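One way to picture these parameters is as a per-pass configuration record. The sketch below is an assumption-laden illustration, not the layout of any particular imaging tool: the field names, the string labels, and every numeric value are hypothetical placeholders chosen to show passes becoming progressively more read-intensive.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PassConfig:
    """Hypothetical per-pass settings mirroring the list above."""
    sector_types: tuple          # which sectors this pass reads
    read_command: str            # label for the read command to apply
    read_attempts: int           # reads per sector before giving up
    sectors_per_block: int       # sectors requested in one read
    sector_read_timeout_ms: int  # time before a software reset is sent
    drive_ready_timeout_ms: int  # time before hardware reset or repower
    on_error: str                # error-handling choice for problem sectors


# Illustrative values only; each pass is more intensive than the last.
PASSES = [
    PassConfig(("unread",), "dma-read", 1, 256, 20, 5000, "skip"),
    PassConfig(("UNC", "AMNF"), "pio-read", 3, 16, 500, 10000, "retry"),
    PassConfig(("UNC", "AMNF", "IDNF"), "pio-read", 10, 1, 5000, 20000, "retry"),
]

for number, cfg in enumerate(PASSES, start=1):
    print(number, cfg.read_attempts, cfg.sector_read_timeout_ms)
```

Making the record immutable (`frozen=True`) means a pass cannot silently change its own settings midway, which keeps the log of what was attempted on each pass trustworthy.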

Imaging Hardware Minimizes Damage

In addition to the software described above, data recovery professionals also need specialized hardware to perform imaging in the presence of read instability. Drive firmware is often unstable in the presence of read instability, which may cause the drive to stop responding. To resolve this issue, the imaging system must have the ability to control the IDE reset line if the drive becomes unresponsive to software commands. Since modern computers are equipped with ATA controllers that do not have the ability to control the IDE reset line, this functionality must be implemented with specialized hardware. In cases where a drive does not even respond to a hardware reset, the hardware should also be able to repower the drive to facilitate a reset.

If the system software cannot deal with an unresponsive hard drive, it will also stop responding, requiring you to perform a manual reboot of the system each time in order to continue the imaging process. This issue is another reason for the imaging software to bypass the system software.

Both of these reset methods must be implemented by hardware but should be under software control. They could be activated by a drive ready timeout. Under normal circumstances the read timeout sends a software reset command to the drive as necessary. If this procedure fails and the drive ready timeout value is reached, the software directs the hardware to send a hardware reset, or to repower the drive. A software reset is the least taxing on the drive, and the repower method is the most taxing. A software reset minimizes drive activity while reading problem sectors, which reduces additional wear. A hardware reset or the repower method deals with an unresponsive hard drive.

Moreover, because reset methods are under software control via the user-configurable timeouts, the process is faster and there is no need for constant user supervision.
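The escalation from software reset to hardware reset to repower can be sketched as a simple ordered loop. This is an illustration under stated assumptions: `drive_ready_after` is a hypothetical hook that applies the given reset, waits up to the relevant timeout, and reports whether the drive responded; in a real system it would be backed by the imaging hardware.

```python
from enum import Enum


class Reset(Enum):
    SOFTWARE = 1   # reset command sent to the drive; least taxing
    HARDWARE = 2   # reset line driven by the imaging hardware
    REPOWER = 3    # power cycle the drive; most taxing


# Try the least taxing method first.
ESCALATION = (Reset.SOFTWARE, Reset.HARDWARE, Reset.REPOWER)


def recover_drive(drive_ready_after):
    """Apply resets in order until the drive responds.

    drive_ready_after: hypothetical callable taking a Reset and
    returning True if the drive became ready after that reset.
    Returns the Reset that worked, or None if none did.
    """
    for reset in ESCALATION:
        if drive_ready_after(reset):
            return reset
    return None


# Simulated drive that ignores software resets but obeys the reset line.
print(recover_drive(lambda r: r is not Reset.SOFTWARE))   # Reset.HARDWARE
```

A return value of `None` is the point at which an operator would need to step in, since every automated method has been exhausted.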

The drive ready timeout can also reduce the chances of drive self-destruction due to head-clicks, which is a major danger in drives with read instability. Head-clicks are essentially a firmware exception in which repeated improper head motion occurs, usually of large amplitude leading to rapid drive self-destruction. Head-clicks render the drive unresponsive and thus the drive ready timeout is reached and the software shuts the drive down, hopefully before damage has occurred. A useful addition to an imaging tool is the ability to detect head-clicks directly, so it can power down the drive immediately without waiting for a timeout, thus virtually eliminating the chances of drive loss.

Next we will look at carving JPEG graphic files, as specified in the document “Description of Exif file format.” For complete details of the file format specification, please refer to the hyperlink to the document, listed on page 1 of this paper.

Next we will look at carving MS Compound Document (and spreadsheet) files, as specified in the document “Open Office.org’s Documentation of the Microsoft Compound Document File Format.” For complete details of the file format specification, please refer to the hyperlink to the document, listed on page 1 of this paper.

The first compound file format that we will look at is the Zip file, as specified in the document “APPNOTE.TXT – .ZIP File Format Specification”, revision date January 6, 2006, from PKWARE, Inc. For complete details of the file format specification, please refer to the hyperlink to the document, listed on page 1. The information described below applies to most common Zip files created with current versions of Zip archive utilities, such as WinZip.