Physical damage

A wide variety of failures can cause physical damage to storage media. CDs can have their reflective metal layer or (on recordable discs) dye layer scratched off; hard disks can suffer any of several mechanical failures, such as head crashes and failed motors; and tapes can simply break. Physical damage always causes at least some data loss, and in many cases the logical structures of the file system are damaged as well. That logical damage must be dealt with before any files can be recovered.

Most physical damage cannot be repaired by end users. For example, opening a hard disk in a normal environment can allow dust to settle on the platters and cause further damage. End users also generally lack the hardware and technical expertise these repairs require, so they usually turn to data recovery companies. These firms use Class 100 clean room facilities to protect the media while repairs are made, and tools such as magnetometers to read the bits off failed magnetic media manually. The extracted raw bits can be used to reconstruct a disk image, which can then be mounted so that its logical damage can be repaired. Once that is complete, the files can be extracted from the image.

Logical damage

Far more common than physical damage is logical damage to a file system. Logical damage is primarily caused by power outages that prevent file system structures from being completely written to the storage medium, but problems with hardware (especially RAID controllers) and drivers, as well as system crashes, can have the same effect. The result is that the file system is left in an inconsistent state. This can cause a variety of problems, such as strange behavior (e.g., infinitely recursing directories, drives reporting negative amounts of free space), system crashes, or outright data loss. Various programs exist to correct these inconsistencies, and most operating systems come with at least a rudimentary repair tool for their native file systems. Linux, for instance, comes with the fsck utility, and Microsoft Windows provides chkdsk. Third-party utilities are also available, and some can produce better results by recovering data even when the operating system's repair utility cannot recognize the disk.

Two main techniques are used by these repair programs. The first, consistency checking, involves scanning the logical structure of the disk and checking that it is consistent with its specification. For instance, in most file systems a directory must have at least two entries: a dot (.) entry that points to itself and a dot-dot (..) entry that points to its parent. A file system repair program can read each directory and make sure that these entries exist and point to the correct directories. If they do not, the program can print an error message and correct the problem. Both chkdsk and fsck work in this fashion. This strategy suffers from a major problem, however: if the file system is sufficiently damaged, the consistency check can fail completely. In that case, the repair program may crash trying to deal with the mangled input, or it may not recognize the drive as having a valid file system at all.
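The dot-entry check can be sketched in Python against a toy in-memory model. Everything here is hypothetical: directories are dictionaries keyed by inode number, `ROOT = 1` is assumed to be the root (treated, as in the usual Unix convention, as its own parent), and `find_parents` and `check_dot_entries` are illustrative names, not the internals of fsck or chkdsk. Each directory's expected parent is inferred from the ordinary entries that point to it.

```python
from typing import Dict, List, Optional

# Hypothetical toy model: a directory maps entry names to inode numbers,
# and the file system maps each directory's inode number to its entries.
Directory = Dict[str, int]
FileSystem = Dict[int, Directory]

ROOT = 1  # assumption: inode 1 is the root directory


def find_parents(fs: FileSystem) -> Dict[int, int]:
    """Infer each directory's parent from the ordinary (non-dot) entries."""
    parents = {ROOT: ROOT}  # the root is treated as its own parent
    for inode, entries in fs.items():
        for name, target in entries.items():
            if name not in (".", "..") and target in fs:
                parents[target] = inode
    return parents


def check_dot_entries(fs: FileSystem, repair: bool = False) -> List[str]:
    """Report (and optionally fix) missing or wrong '.' and '..' entries."""
    errors = []
    parents = find_parents(fs)
    for inode, entries in fs.items():
        expected: Dict[str, Optional[int]] = {".": inode, "..": parents.get(inode)}
        for name, want in expected.items():
            got = entries.get(name)
            if want is None:
                # No ordinary entry points here, so the parent cannot be inferred.
                errors.append(f"dir {inode}: parent unknown (orphaned directory)")
            elif got != want:
                errors.append(f"dir {inode}: '{name}' is {got}, expected {want}")
                if repair:
                    entries[name] = want
    return errors


if __name__ == "__main__":
    fs = {
        1: {".": 1, "..": 1, "home": 2},
        2: {".": 2, "..": 1, "docs": 3},
        3: {".": 3},  # damaged: the '..' entry is missing
    }
    print(check_dot_entries(fs, repair=True))  # ["dir 3: '..' is None, expected 2"]
    print(fs[3])  # {'.': 3, '..': 2}
```

The sketch only reports orphaned directories instead of guessing a parent for them; a real checker would typically reattach them somewhere for the user to inspect, such as a lost+found directory.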

The second technique for file system repair is to assume very little about the state of the file system being analyzed and to rebuild it from scratch, using whatever hints any undamaged file system structures provide. This strategy involves scanning the entire drive, noting all file system structures and possible file boundaries, and then trying to match what was found to the specifications of a working file system. Some third-party programs use this technique, which is notably slower than consistency checking. It can, however, recover data even when the logical structures are almost completely destroyed. This technique generally does not repair the underlying file system; it merely allows data to be extracted from it to another storage device.
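A full rebuild of this kind is specific to each file system, but its search for possible file boundaries can be illustrated with a simpler, related technique: signature-based file carving, which ignores file system metadata entirely and scans the raw bytes for known header and footer markers. The sketch below is a hypothetical `carve_jpegs` helper that assumes contiguous (unfragmented) JPEG files, which begin with the bytes `FF D8 FF` and end with `FF D9`; it is not the algorithm used by any particular recovery product.

```python
from typing import List, Tuple

# JPEG files start with an SOI marker (FF D8) followed by the start of another
# marker (FF), and end with an EOI marker (FF D9).
JPEG_HEADER = b"\xff\xd8\xff"
JPEG_FOOTER = b"\xff\xd9"


def carve_jpegs(image: bytes, max_size: int = 10 * 1024 * 1024) -> List[Tuple[int, bytes]]:
    """Return (offset, data) for each header...footer run found in a raw image.

    Assumes each file is stored contiguously. Because it stops at the first
    footer, a JPEG containing an embedded thumbnail may be cut short.
    """
    found: List[Tuple[int, bytes]] = []
    start = image.find(JPEG_HEADER)
    while start != -1:
        end = image.find(JPEG_FOOTER, start + len(JPEG_HEADER))
        if end == -1:
            break  # no footer left anywhere in the image
        end += len(JPEG_FOOTER)
        if end - start <= max_size:
            found.append((start, image[start:end]))
            start = image.find(JPEG_HEADER, end)
        else:
            # Implausibly large candidate: try the next header instead.
            start = image.find(JPEG_HEADER, start + 1)
    return found


if __name__ == "__main__":
    # A fake "disk": zero padding around two tiny JPEG-like byte runs.
    disk = (b"\x00" * 16 + b"\xff\xd8\xff\xe0JFIF\xff\xd9"
            + b"\x00" * 8 + b"\xff\xd8\xff\xdbDATA\xff\xd9")
    for offset, data in carve_jpegs(disk):
        print(offset, len(data))  # 16 10, then 34 10
```

Carving works even when no file system structures survive, which is its strength; the price is that fragmented files come back corrupted and file names and directories are lost, which is why tools that can also use surviving metadata tend to do better.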

While most logical damage can be either repaired or worked around using these two techniques, data recovery software can never guarantee that no data loss will occur. For instance, in the FAT file system, when two files claim to share the same allocation unit (“cross-linked”), data loss for one of the files is essentially guaranteed.
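Detecting a cross-link is straightforward once the allocation table can be read: follow every file's cluster chain and record which files claim each cluster. The sketch below uses a hypothetical in-memory table in which `fat[n]` holds the number of the cluster that follows cluster `n`, `EOC = -1` stands in for the reserved end-of-chain values that real FAT volumes use, and `files` maps each file name to its first cluster; `find_cross_links` is an illustrative name, not part of chkdsk.

```python
from collections import defaultdict
from typing import Dict, List

EOC = -1  # stand-in for FAT's reserved end-of-chain values


def find_cross_links(fat: List[int], files: Dict[str, int]) -> Dict[int, List[str]]:
    """Map each cluster claimed by more than one file to the names of those files."""
    owners: Dict[int, List[str]] = defaultdict(list)
    for name, start in files.items():
        cluster, seen = start, set()
        # Stop at end-of-chain, or if a damaged chain loops back on itself.
        while cluster != EOC and cluster not in seen:
            seen.add(cluster)
            owners[cluster].append(name)
            cluster = fat[cluster]
    return {c: names for c, names in owners.items() if len(names) > 1}


if __name__ == "__main__":
    # a.txt: 2 -> 3 -> 4 -> end;  b.txt: 5 -> 4 -> end (damaged link into a.txt)
    fat = [0, 0, 3, 4, EOC, 4, EOC, 0]
    print(find_cross_links(fat, {"a.txt": 2, "b.txt": 5}))  # {4: ['a.txt', 'b.txt']}
```

Once two chains merge, every cluster after the merge point is claimed by both files, yet its contents can belong to at most one of them. That is why one file's data is essentially lost; the sketch reports the conflict but cannot tell which file the data really belongs to.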

The increased use of journaling file systems, such as NTFS 5.0, ext3, and XFS, is likely to reduce the incidence of logical damage. These file systems can always be "rolled back" to a consistent state, which means that the only data likely to be lost is what was in the drive's cache at the time of the system failure. However, regular system maintenance should still include running a consistency checker, in case a bug in the file system software corrupts data. Also, in certain situations even journaling file systems cannot guarantee consistency. For instance, if the disk delays writing data back or reorders writes in ways invisible to the file system (some disks report changes as flushed to the disk when they have not actually been written), a power loss can still cause such errors. This is usually not a problem when the delay or reordering is done by the file system software's own caching mechanisms. The solution is to use hardware that does not report data as written until it actually is written, or to use disk controllers equipped with a battery backup so that the pending data can be written when power is restored. Alternatively, the entire system can be equipped with a battery backup (UPS), which may keep the system running through such situations, or at least give it time to shut down properly.
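The recovery idea behind journaling can be sketched as redo logging: updates are first recorded in a journal followed by a commit record, and after a crash only transactions whose commit record reached the journal are replayed, while anything without one is discarded. The record format, the dictionary standing in for on-disk metadata, and `replay_journal` are all hypothetical simplifications; NTFS, ext3, and XFS each use their own journal formats.

```python
from typing import Dict, List, Tuple

# Hypothetical journal records:
#   ("write", txid, key, value)  one metadata update within transaction txid
#   ("commit", txid)             written only after all of txid's updates
Record = Tuple[object, ...]


def replay_journal(state: Dict[str, str], journal: List[Record]) -> Dict[str, str]:
    """Redo every committed transaction; ignore ones cut off before commit."""
    committed = {rec[1] for rec in journal if rec[0] == "commit"}
    recovered = dict(state)  # leave the caller's copy untouched
    for rec in journal:
        if rec[0] == "write" and rec[1] in committed:
            _, _, key, value = rec
            recovered[key] = value
    return recovered


if __name__ == "__main__":
    on_disk = {"inode7.size": "0"}
    journal = [
        ("write", 1, "inode7.size", "4096"),
        ("write", 1, "bitmap.block42", "used"),
        ("commit", 1),
        ("write", 2, "inode8.size", "512"),  # power failed before tx 2 committed
    ]
    print(replay_journal(on_disk, journal))
    # {'inode7.size': '4096', 'bitmap.block42': 'used'}
```

Because each record stores a final value rather than a change, replaying the same journal twice gives the same result, so a second crash during recovery does no harm. The scheme also depends on the commit record truly being on stable storage before the in-place updates begin; a disk that acknowledges a flush it has not performed breaks exactly that ordering, which is the failure case described above.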

Backing up your data is also a good way to protect it.

However, backup technology and practices have not always protected data adequately. Most computer users rely on backups and redundant storage technologies as their safety net in the event of data loss. For many users, these backups and storage strategies work as planned; others are not so lucky. Many people back up their data, only to find their backups useless at the crucial moment when they need to restore from them. These systems rely on a combination of technology and human intervention to succeed. For example, backup systems assume that the hardware is in working order. They assume that the user has the time and the technical expertise necessary to perform the backup properly. They also assume that the backup tape or CD-RW disc is in working order, and that the backup software is not corrupted. In reality, hardware can fail, tapes and CD-RW discs do not always work properly, backup software can become corrupted, and users accidentally back up corrupted or incorrect information. Backups are not infallible and should not be relied upon absolutely.

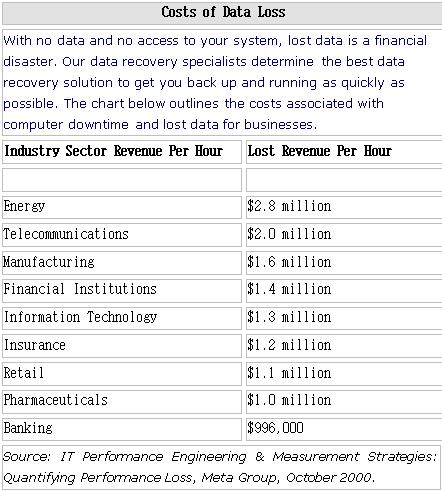

A data loss has occurred – now what? Deciding whether lost data needs to be recovered can be difficult, and there are several things to take into consideration when making that decision.
